We have received funding from the NSF for our project, Learning Joint Crowd-Space Embeddings for Cross-Modal Crowd Behavior Prediction.

Team: Vladimir Pavlovic (PI), Mubbasir Kapadia (Co-PI), Sejong Yoon (Co-PI)

Project website: https://project-nucleum.github.io/

Abstract:

Many societal activities, including air transport, disaster remediation, and social events such as protests, concerts, sports, or voting, require efficient and effective methodologies for monitoring, understanding, and reacting to the behaviors of large concentrations of people, the crowds, that give rise to those events. At the same time, the type and evolution of those behaviors are intimately tied to the form and function of the environments where they occur. As crowds grow in size or change their actions in response to intrinsic or extrinsic factors, it is critical for built environments, including their future designs, to adapt to those changes. Present-day technological tools aim to analyze and predict the link between crowds and environments. However, they rely on rigid, hand-tuned, computationally costly simulation models, which severely limits their practical utility. This project seeks to bridge this gap by devising a novel way of modeling the inherent relationship between the structure and semantics of complex environments and the presence and behavior of their human occupants, from small groups to dense crowds. The main goal is to accurately predict crowd behavior, from microscopic motion to aggregate crowd dynamics, in novel, never-before-seen environment configurations using Neuro-Cognitive Modeling of Environments and Humans (NUCLEUM), replacing physical simulations that are computationally expensive yet often mismatched with reality. Project details can be found at https://project-nucleum.github.io.

To accomplish this goal, this project collaboratively seeks to tackle the problem of predicting crowd behavior in complex environments by learning data-driven models that will seamlessly “translate” between different representations of crowds and their environments.

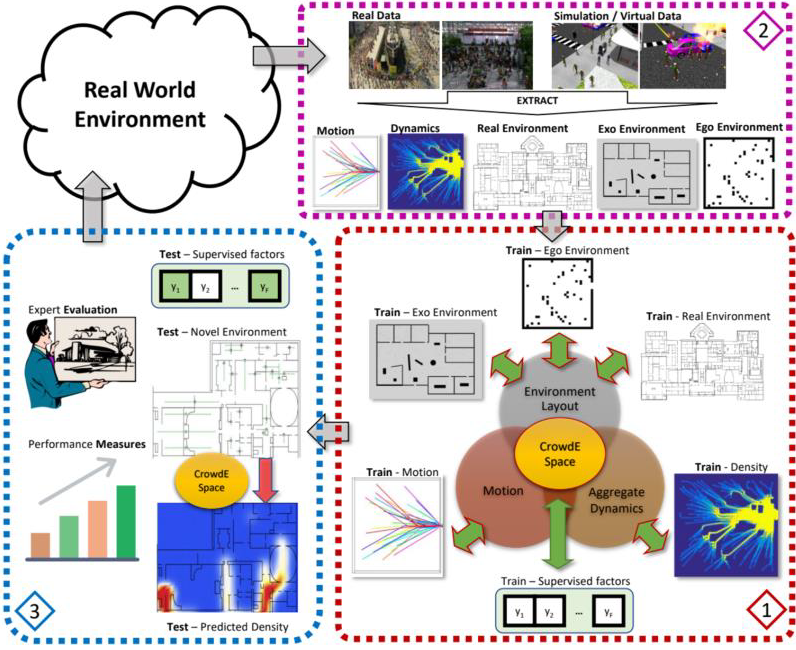

Specifically, this project has three main research thrusts. (Thrust 1) Learning a Joint Crowd-Space Representation. The project will develop a novel multi-concept transfer learning framework that enables coupled learning across three highly heterogeneous concepts: (a) environment layouts (e.g., floor plans), (b) macroscopic crowd properties (e.g., flow), and (c) microscopic crowd trajectories. Once learned, the framework will make it possible to predict a crowd's flow patterns directly from the layout of an environment, and vice versa. (Thrust 2) A Hybrid Multi-modal Corpus of Environment Contexts and Crowd Movement. This project will create a novel hybrid multi-modal corpus of environmental contexts and crowd behavior, leveraging data from field observations, controlled laboratory experiments, crowd simulations, and multi-user virtual reality platforms. This corpus will allow training models that generalize across the space of environment and crowd conditions. (Thrust 3) Model Evaluation, Applications, and Use Cases. The robustness of the trained models will be evaluated by their ability to produce valid crowd trajectories that are statistically similar to ground-truth observations while generalizing to new, unseen crowd and environmental contexts. The project will then apply the trained models in a variety of application contexts, on real-world built and yet-to-be-built environments, to predict crowd behavior in unseen environments, identify vulnerabilities in environments, and reconfigure environment designs to improve crowd behavior.