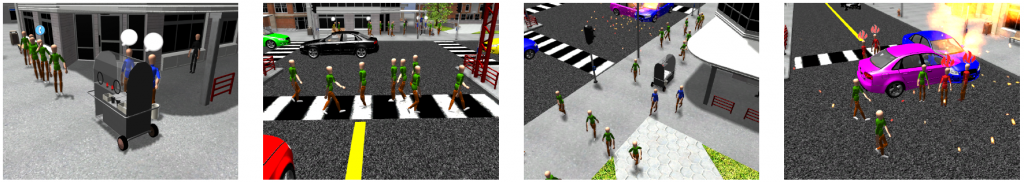

Purposeful interactive virtual worlds are widely used in cinematic content creation, interactive entertainment, and urban planning, as well as in training, disaster, and security simulations. Autonomous goal-driven agents are essential for simulating environments (real or virtual) with a human populace. Despite many significant contributions from artificial intelligence, robotics, and computer animation, authoring and simulating complex, functional, purposeful agents remains a difficult problem.

We face two main challenges. First, we need to provide end users with an easy-to-use interface for authoring individual actors in a scenario and for directing the narrative of the entire scenario. Second, we want to simulate autonomous agents that have their own capabilities, goals, and motivations, yet still exhibit complex interactions with the environment, other agents, and user avatars, all in real time. To address both, we have created an integrated framework for steering, navigation, group coordination, and behavior authoring for autonomous virtual humans in complex, dynamic virtual worlds.

Computational Models of Human Movement

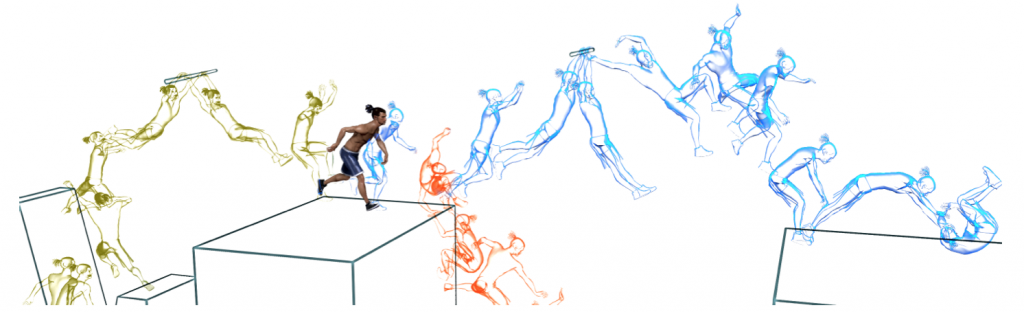

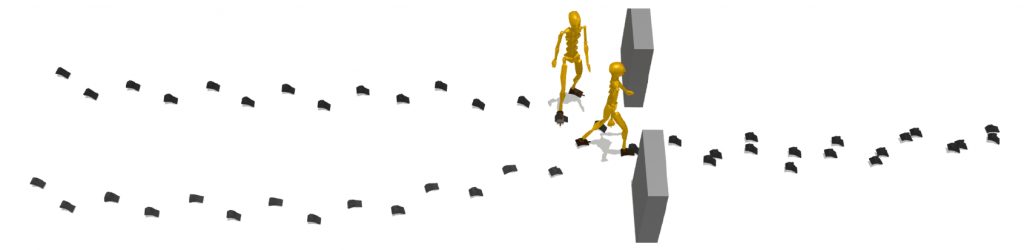

Our work uses a biomechanically based locomotion model to generate plausible footstep trajectories for human-like agents. We demonstrate nuanced locomotion behaviors, such as side-stepping and careful foot placement, that have not been shown before. We have developed a method that geometrically analyzes an environment to determine the dexterous locomotion capabilities it affords to human (or robotic) agents. Moving beyond locomotion behaviors alone, our work also explores methods for integrating personality into human movement.

Navigation and Goal-Directed Collision Avoidance

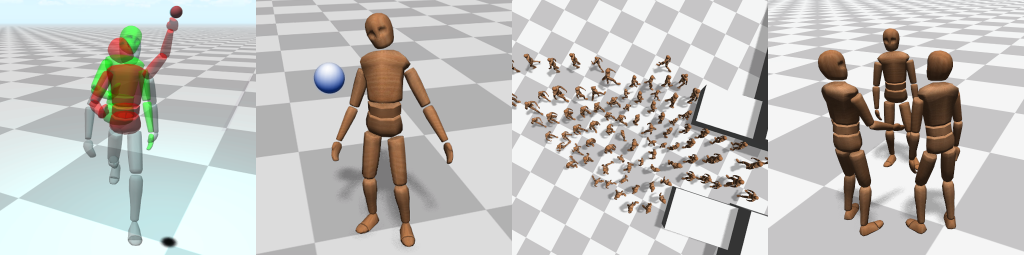

We have developed a novel variable-resolution egocentric field representation to model perception and situation awareness in autonomous virtual humans. One of the main benefits of this approach is the ability to perform implicit space-time planning without the overhead of modeling time as a dimension. Steering agents encounter a large space of challenging situations, and we recognized that hybrid techniques are needed to solve them; we proposed a method that combines planning, prediction, and reaction in a single framework. Our work also explores the use of machine learning to automatically learn navigation and movement policies, and the use of multi-domain planning techniques to efficiently handle the high-dimensional space of multi-agent collision avoidance.
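
To make the idea of a variable-resolution egocentric representation more concrete, the sketch below shows one possible reading of it, not the representation used in our framework. It assumes an agent-centered polar grid whose rings grow geometrically with distance, so nearby space is resolved finely and distant space coarsely. The function name `egocentric_cell` and the parameters `inner_radius`, `growth`, and `sectors` are hypothetical and chosen only for illustration.

```python
import math

def egocentric_cell(agent_pos, agent_heading, point,
                    inner_radius=0.5, growth=2.0, sectors=8):
    """Map a world-space point to a (ring, sector) cell centered on the agent.

    Illustrative assumption: ring 0 covers distances below inner_radius,
    and each further ring is `growth` times wider than the previous one,
    so resolution drops with distance from the agent.
    """
    dx = point[0] - agent_pos[0]
    dy = point[1] - agent_pos[1]
    dist = math.hypot(dx, dy)

    # Bearing of the point relative to the agent's heading, in [0, 2*pi).
    angle = (math.atan2(dy, dx) - agent_heading) % (2.0 * math.pi)

    if dist < inner_radius:
        ring = 0
    else:
        ring = int(math.floor(math.log(dist / inner_radius, growth))) + 1

    sector = int(angle / (2.0 * math.pi / sectors)) % sectors
    return ring, sector

# The same obstacle falls into different cells as the agent turns.
obstacle = (1.0, 3.0)
print(egocentric_cell((0.0, 0.0), 0.0, obstacle))
print(egocentric_cell((0.0, 0.0), math.pi / 2, obstacle))
```

Because cells are indexed relative to the agent's position and heading, the same obstacle moves between cells as the agent moves or turns, which is what makes the representation egocentric.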

Behavior Authoring

We have developed a behavior authoring framework that gives the user complete control over the authoring of animated virtual avatars. Actors are first created by defining their state and action spaces, and are then specialized using modifiers and constraints. Behaviors define the goals and objectives of an actor. A multi-actor heuristic search technique generates complex multi-actor interactions. Using this framework, we can model interactions in which agents collaborate or compete with one another to achieve common or conflicting objectives. This work provides a strong foundation for developing the next generation of authorable, interactive, narrative virtual worlds.
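
The pipeline described above (state and action spaces, constraints, goals, and a heuristic search over several actors) can be illustrated with a toy example. The sketch below is not our framework's implementation. It assumes a hypothetical 3x2 grid world, treats each actor's state as a grid cell and its actions as single-cell moves or waiting, and runs a plain A* search over the joint state of all actors. All names (`actor_actions`, `satisfies_constraints`, `plan_joint`) are invented for illustration.

```python
from heapq import heappush, heappop
from itertools import count, product

WIDTH, HEIGHT = 3, 2  # hypothetical grid world
MOVES = [(0, 0), (1, 0), (-1, 0), (0, 1), (0, -1)]  # wait + four steps

def actor_actions(pos):
    """Action space of one actor: cells reachable in one time step."""
    x, y = pos
    return [(x + dx, y + dy) for dx, dy in MOVES
            if 0 <= x + dx < WIDTH and 0 <= y + dy < HEIGHT]

def satisfies_constraints(old, new):
    """Constraints: actors never share a cell and never swap cells."""
    if len(set(new)) < len(new):
        return False
    for i in range(len(new)):
        for j in range(i + 1, len(new)):
            if new[i] == old[j] and new[j] == old[i]:
                return False
    return True

def heuristic(state, goals):
    """Lower bound on remaining steps: the farthest actor's Manhattan distance."""
    return max(abs(p[0] - g[0]) + abs(p[1] - g[1])
               for p, g in zip(state, goals))

def plan_joint(starts, goals):
    """A* over joint actor states; returns a list of joint states or None."""
    start, goals = tuple(starts), tuple(goals)
    tie = count()  # tie-breaker so the heap never compares states
    frontier = [(heuristic(start, goals), next(tie), 0, start, None)]
    parent = {}
    while frontier:
        _, _, cost, state, prev = heappop(frontier)
        if state in parent:
            continue  # already expanded via a path at least as short
        parent[state] = prev
        if state == goals:
            path = []
            while state is not None:
                path.append(state)
                state = parent[state]
            return path[::-1]
        for nxt in product(*(actor_actions(p) for p in state)):
            if nxt not in parent and satisfies_constraints(state, nxt):
                priority = cost + 1 + heuristic(nxt, goals)
                heappush(frontier, (priority, next(tie), cost + 1, nxt, state))
    return None

# Two actors with conflicting goals: each wants the other's starting cell.
plan = plan_joint(starts=[(0, 0), (2, 0)], goals=[(2, 0), (0, 0)])
for step, joint_state in enumerate(plan):
    print(step, joint_state)
```

Searching the joint state space lets the planner discover coordinated solutions, such as one actor detouring through the second row so the other can pass. The branching factor grows exponentially with the number of actors, so a sketch like this is only practical for a handful of them.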